For version 12.0 release of WASD VMS Web Services.

Published November 2021

Document generated using wasDOC version 2.0.0

This document describes the more significant features and facilities available with the WASD Web Services package.

For installation and update details see WASD Web Services - Installation

For detailed configuration information see WASD Web Services - Configuration

For information on CGI, CGIplus, ISAPI, OSU, etc., scripting, see WASD Web Services - Scripting

And for a description of WASD Web document, SSI and directory listing behaviours and options, see WASD Web Services - Environment

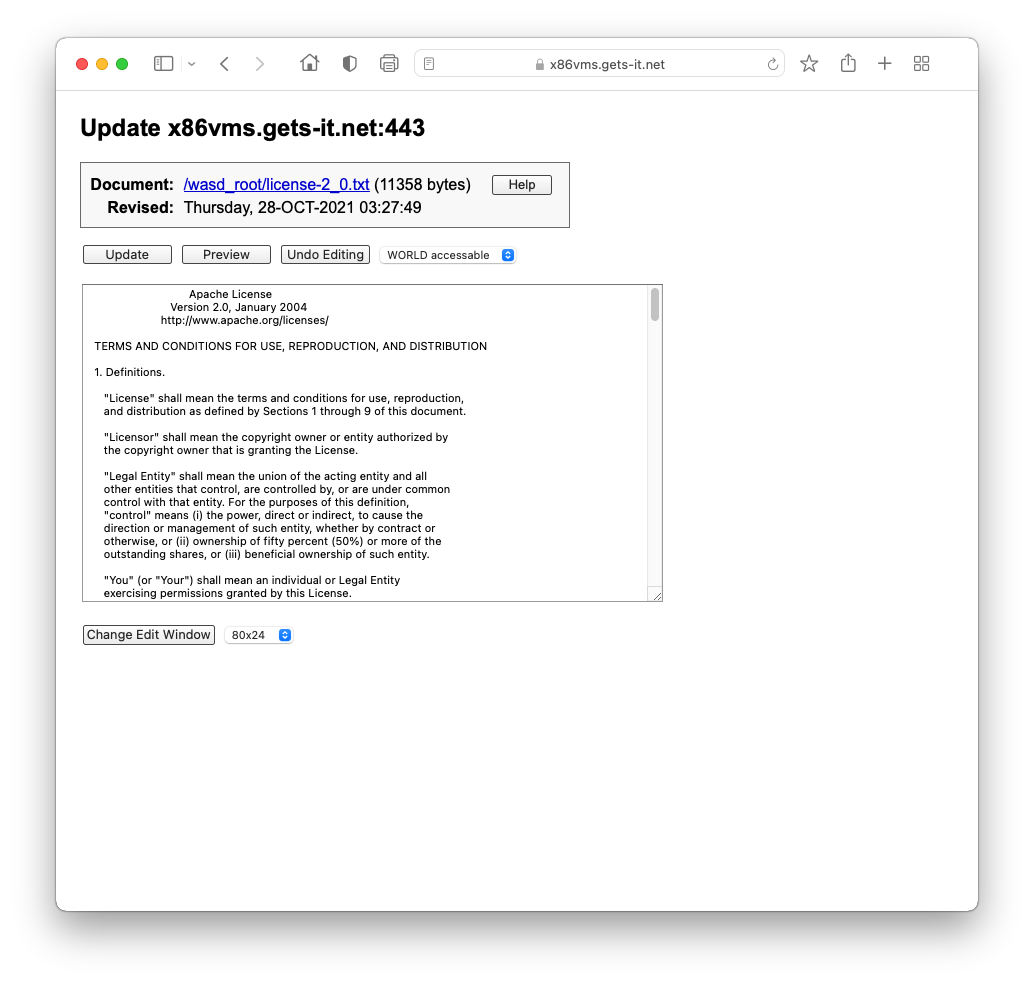

WASD VMS Web Services – Copyright © 1996-2021 Mark G. Daniel

Licensed under the Apache License, Version 2.0 (the "License");

https://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

Mark.Daniel@wasd.vsm.com.au

A pox on the houses of all spammers. Make that two poxes.

All copyright and trademarks within this document belong to their rightful owners. See 15. Attribution and Acknowledgement.


| 1.1 Troubleshooting? |


With the installation, update and detailed configuration of the WASD Web Services package covered in WASD Web Services - Install and Config, why have an introduction in this subsequent document? After getting the basics up and running (often the first thing we want to do) it's time to stop and consider the tool and what we're trying to accomplish with it. So this section provides an overview of the package's design philosophy, history and significant features and capabilities by topic.

The document assumes a basic understanding of Web technologies and uses terms without explaining them (e.g. HTTP, HTML, URL, CGI, SSI, etc.). The reader is referred to documents specifically on these topics.

WASD Web Services originated from a 1993 decision by Wide Area Surveillance Division (WASD) management (then High Frequency Radar Division, HFRD) to make as much information as possible, both administrative and research, available online to a burgeoning personal desktop workstation and PC environment (to use the current term … an intranet) using the then emerging Web technologies.

It then became the objective of this author to make all of our systems' VMS-related resources available via HTTP and HTML, regardless of the underlying data or storage format. An examination of the WASD package will show that this objective is substantially achieved.

Reasons for developing (remember; back in 1994!) a local HTTP server were few but compelling:

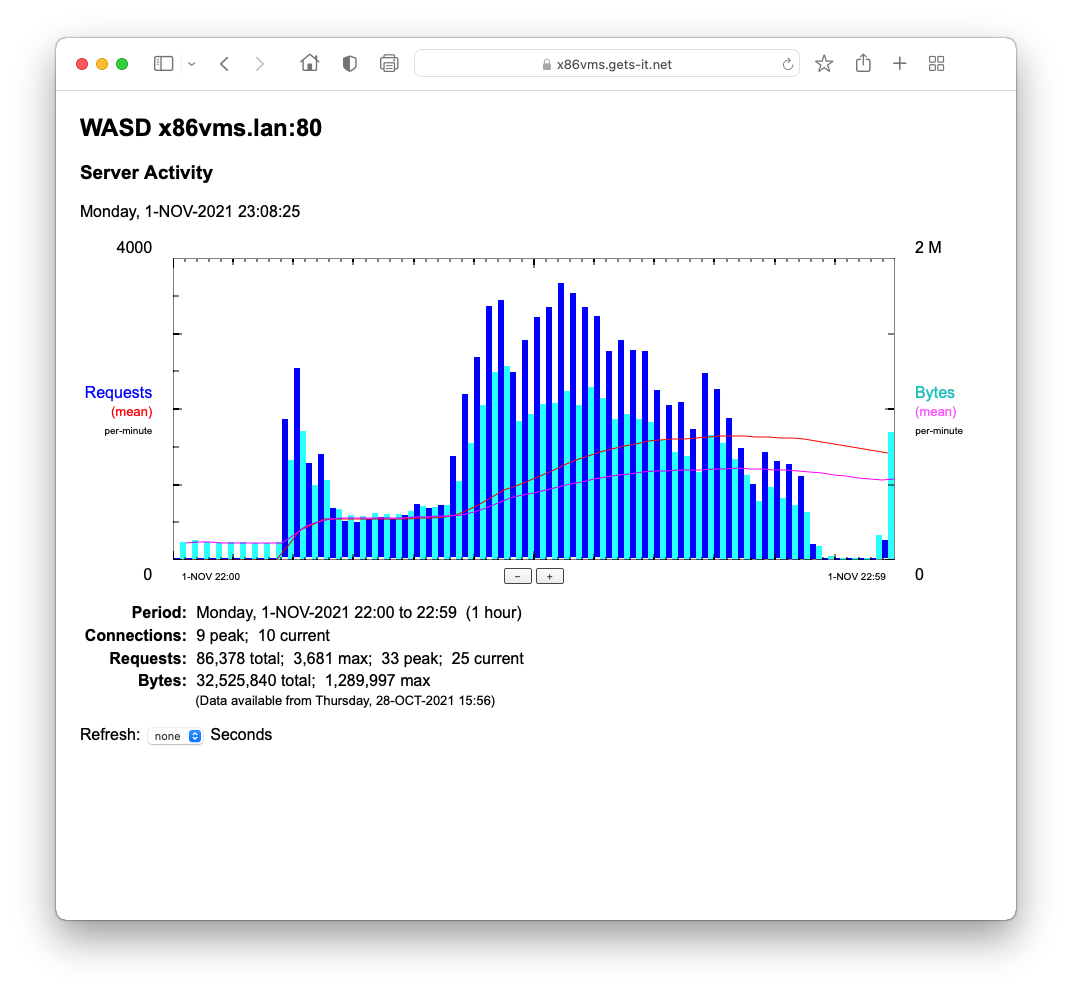

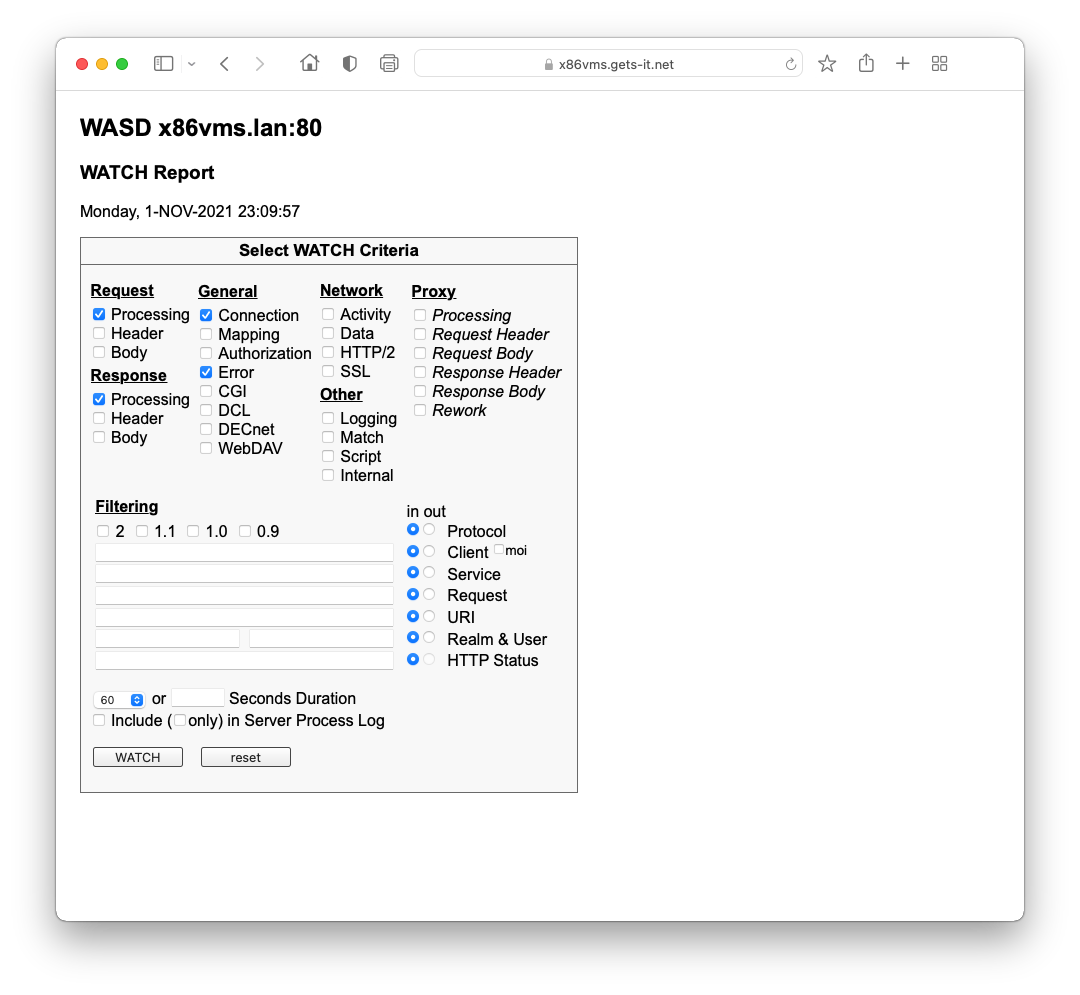

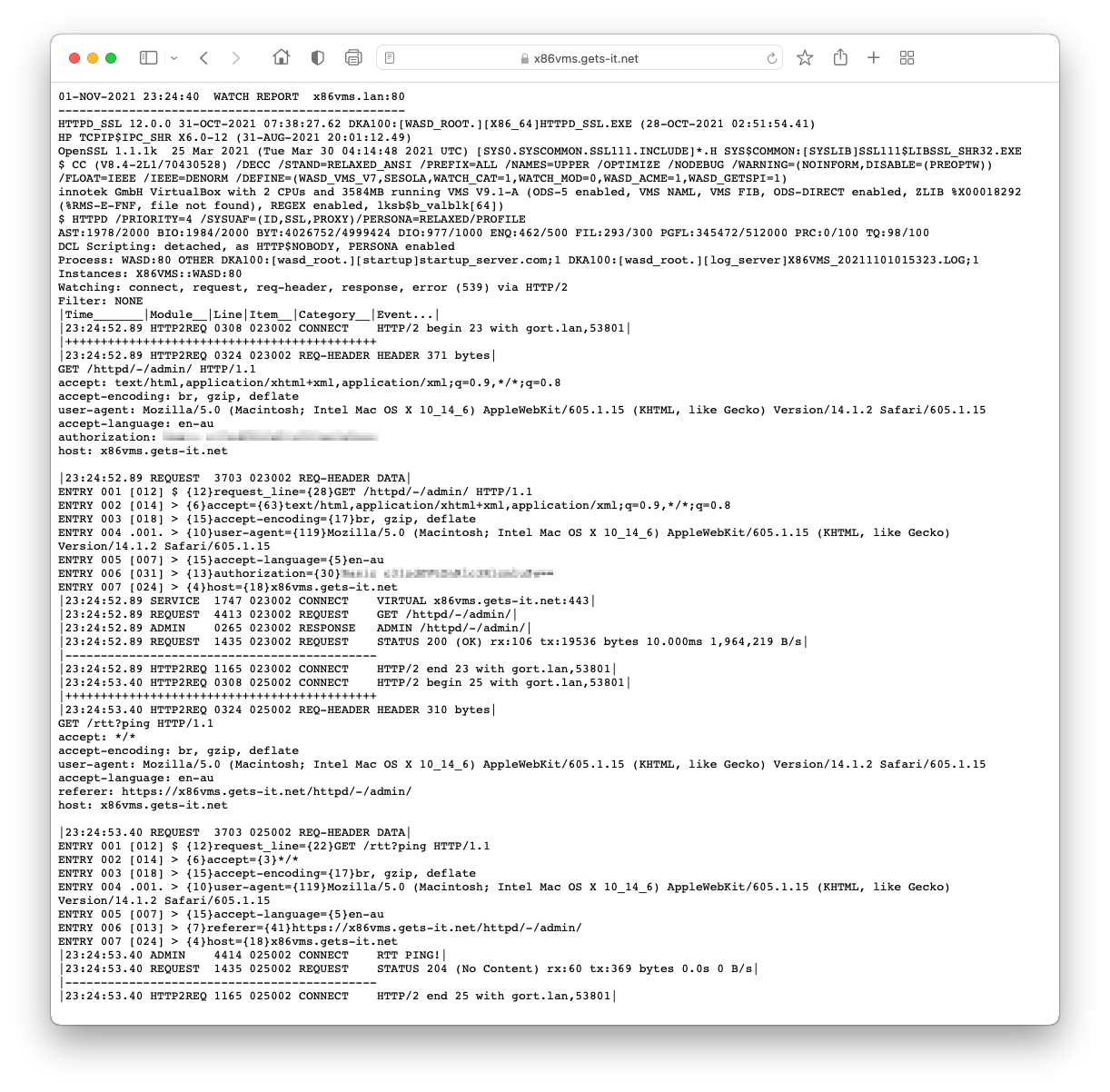

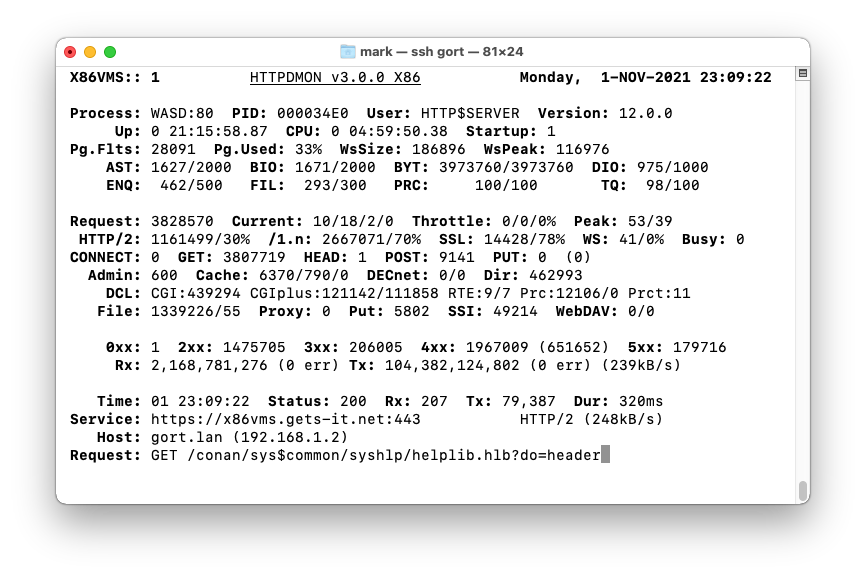

When initially installing or configuring WASD, and sometimes later where something breaks spectacularly, it is most useful to be able to gain insight into what the server is up to.

The go-to tool is WATCH (yes, all capitals, and for no other reason than it makes it stand out).

WATCH is described in detail in 10. WATCH Facility of this document.

For most circumstances WATCH can be made available for troubleshooting even if the configuration is significantly broken. This is done by using a skeleton-key to authorise special access into the server.

The skeleton-key is described in detail in 3.12 Skeleton-Key Authentication, also in this document.

TL;DR

Enable the skeleton-key at the command line using any username that begins with an underscore and is at least 8 characters long; the password must likewise be at least 8 characters.

Then using a browser access any available service, entering the above username (including underscore) and password when prompted.

The service administration facilities (of which WATCH is one) are also available and useful.

| 2.1 Server Behaviour |

| 2.2 VMS Versions |

| 2.3 TCP/IP Packages |

| 2.4 International Features |


The most fundamental component of the WASD VMS Web Services environment is the HTTP server (HyperText Transfer Protocol Daemon, or HTTPd). WASD has a single-process, multi-threaded, asynchronous I/O design.

The following bullet-points summarise the features and facilities, many of which are described in significant detail in following chapters.

The technical aspects of server design and behaviour are described in WASD_ROOT:[SRC.HTTPD]READMORE.TXT

The WASD server is supported on any VMS version from V7.0 upwards, on Alpha, Itanium and x86-64 architectures. As is commonly the case on VMS platforms, the current version (as of 2021), V8.4 on Alpha and Itanium, required nothing more than relinking. Obviously no guarantees can be made for yet-to-be-released versions, but at worst these should require only the same.

The WASD distribution and package organisation fully supports mixed-architecture clusters (Alpha, Itanium and/or x86-64 in the one cluster) as one integrated installation.

The WASD server uses the TCP/IP Services (UCX) BG $QIO interface. The following packages support this interface and may be used.

** any not unreasonably ancient version

*** this might be becoming a bit of a stretch

To deploy IPv6 services this package must support IPv6.

WASD provides a number of features that assist in the support of non-English and multi-language sites. These "international" features only apply to the server, not necessarily to any scripts!

A directory may contain language-specific variants of a basic document. When requesting the basic document name these variants are automatically and transparently provided as the response if one matches preferences expressed in the request's "Accept-Language:" request header field. Both text and non-text documents (e.g. images) may be provided using this mechanism.

Configuration information is provided in section Language Variants of WASD Configuration.

Generally the default character set for documents on the Web is ISO-8859-1 (Latin-1). The server allows the specification of any character set as a default for text document responses (plain and HTML). In addition, text document file types may be modified or additional ones specified that have a different character set associated with that type. Furthermore, specific character sets may be associated with mapping paths. A site can therefore relatively easily support multiple character set document resources.

In addition the server may be configured to dynamically convert one character set to another during request processing. This is supported using the VMS standard NCS character set conversion library.

For further information see [CharsetDefault], [CharsetConvert] and [AddType] in Alphabetic Listing of WASD Configuration.

The server uses an administrator-customizable database of messages that can contain multiple language instances of some or all messages, using the Latin-1 character set (ISO-8859-1). Although the base English messages can be completely changed and/or translated to provide any message text required or desired, a more convenient approach is to supplement this base set with a language-specific one.

One language is designated the preferred language. This would most commonly be the language appropriate to the geographical location and/or clientele of the server. Another language is designated the base language. This must have a complete set of messages and is a fall-back for any messages not configured for the additional language. Of course this base language would most commonly be the original English version.

More than just two languages can be supported. If the browser has preferred languages set the server will attempt to match a message with a language in this preference list. If not, then the server-preferred and then the base language message would be issued, in that order. In this way it would be possible to simultaneously provide for English, French, German and Swedish audiences, just for example.
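The fall-back order described above can be sketched in a few lines of Python. This is only an illustration of the selection logic, not the server's implementation; the function names, the dictionary layout of the message database and the exact-match comparison of language tags (so "fr-CA" would not match "fr") are all assumptions made for the example.

```python
def parse_accept_language(header):
    """Return language tags from an Accept-Language value, best first."""
    weighted = []
    for position, item in enumerate(header.split(",")):
        item = item.strip()
        if not item:
            continue
        tag, _, params = item.partition(";")
        quality = 1.0
        params = params.strip()
        if params.startswith("q="):
            try:
                quality = float(params[2:])
            except ValueError:
                quality = 0.0
        if quality > 0:
            # Sort by descending quality, then by order of appearance.
            weighted.append((-quality, position, tag.strip().lower()))
    return [tag for _, _, tag in sorted(weighted)]


def select_message(messages, key, accept_language, preferred, base):
    """Try browser preferences, then the server-preferred, then the base language."""
    candidates = parse_accept_language(accept_language) + [preferred, base]
    for language in candidates:
        text = messages.get(language, {}).get(key)
        if text is not None:
            return text
    raise KeyError(key)  # the base language is expected to be complete
```

With a hypothetical database holding a complete "en" set and partial "fr" and "sv" sets, a browser sending "de, sv;q=0.8" would receive the Swedish text where one exists, then the server-preferred language, then the English base text.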

For message configuration information see Message Configuration of WASD Configuration.

Dates appearing in server-generated, non-administrative content (e.g. directory listings, not META-tags, which use Web-standard time formats) will use the natural language specified by any SYS$LANGUAGE environment in use on the system or specifically created for the server.

Virtual-server-associated mapping, authorization and character sets allow for easy multiple language and environment sites. Further per-request tailoring may be deployed using conditional rule mapping described below. A single server can support multi-homed (host name) and multiple port services.

For virtual services information see Configuration Considerations of WASD Configuration.

Mapping rules map requested URL paths to physical or other paths (see Request Processing Configuration of WASD Configuration). Conditional rules are only applied if the request matches criteria such as preferred language, host address (hence geographical location to a certain extent), etc. This allows requests for generic documents (e.g. home pages) to be mapped to language versions appropriate to the above criteria.

For conditional mapping information see Conditional Configuration of WASD Configuration.


Authentication is the verification of a user's identity, usually through username/password credentials. Authorization is allowing a certain action to be applied to a particular path based on authentication of the originator.

Generally, authorization is a two-step process. First, authentication, using a username/password database. Second, authorization, determining what the username is allowed to do for this transaction.

Basic authorization was discussed in Authorization Configuration (Basics) of WASD Configuration. This section discusses all the aspects of WASD authentication and authorization.

By default, the logical name WASD_CONFIG_AUTH locates a common authorization rule file. Simple editing of the file and reloading into the running server changes the processing rules.

Server authorization is performed using a configuration file, authentication source, and optional full-access and read-only authorization grouping sources, and is based on per-path directives. There is no user-configured authorization necessary, or possible! In the configuration file paths are associated with the authentication and authorization environments, and so become subject to the HTTPd authorization mechanism. Reiterating … WASD HTTPd authorization administration involves those two aspects: setting authorization against paths and administering the authentication and authorization sources.

Authorization is applied to the request path (i.e. the path in the URL used by the client). Sometimes it is possible to access the same resource using different paths. Where this can occur care must be exercised to authorize all possible paths.

Where a request will result in script activation, authorization is performed on both script and path components. First script access is checked for any authorization, then the path component is independently authorized. Either may result in an authorization challenge/failure. This behaviour can be disabled using a path SETting rule, see SET Rule of WASD Configuration.

The authentication source name is referred to as the realm, and refers to a collection of usernames and passwords. It can be the system's SYSUAF database.

The authorization source is referred to as the group, and commonly refers to a collection of usernames and associated permissions.

The configuration file rules are scanned from first towards last, until a matching rule is encountered (or end-of-file). Generally a rule has a trailing wildcard to indicate that all sub-paths are subject to the same authorization requirements.

Rule matching is string pattern matching, comparing the request-specified path, and optionally other components of the request when using configuration conditionals (see Conditional Configuration of WASD Configuration), to a series of patterns until one of the patterns matches, at which stage the authorization characteristics are applied to the request and authentication processing is undertaken. If a matching pattern (rule) is not found the path is considered not to be subject to authorization. Both wildcard and regular expression based pattern matching are available (see String Matching of WASD Configuration).
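As a rough illustration of first-match scanning, the following Python sketch walks a list of (pattern, settings) pairs from top to bottom and stops at the first pattern that matches the request path. The rule list, the settings strings and the function names are hypothetical, and the sketch assumes that an asterisk matches zero or more characters and that comparison is case-insensitive; the server's own matcher is described in String Matching of WASD Configuration.

```python
import re


def compile_wildcard(pattern):
    """Turn a path pattern with '*' wildcards into a case-insensitive regex."""
    literal_parts = (re.escape(part) for part in pattern.split("*"))
    return re.compile(".*".join(literal_parts), re.IGNORECASE | re.DOTALL)


def first_matching_rule(rules, request_path):
    """Scan rules first to last; return the settings of the first match."""
    for pattern, settings in rules:
        if compile_wildcard(pattern).fullmatch(request_path):
            return settings
    return None  # no rule matched: the path is not subject to authorization


# Hypothetical rule list; the more specific path is placed first so that
# it is reached before the general trailing-wildcard rule.
RULES = [
    ("/web/project/public/*", "world read"),
    ("/web/project/*", "realm PROJECT, read and write"),
]
```

Because scanning stops at the first match, ordering matters: reversing the two hypothetical rules would leave the "public" rule unreachable.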

A policy regarding when and how authorization can be used may be established on a per-server basis. This can restrict authentication challenges to "https:" (SSL) requests (4. Transport Layer Security), thereby ensuring that the authorization environment is not compromised by use in non-encrypted transactions. Two server qualifiers provide this.

Note also that individual paths may be restricted to SSL requests using either the mapping conditional rule configuration or the authorization configuration files. See Conditional Mapping of WASD Configuration.

In addition, the following configuration parameters have a direct role in an established authorization policy.

Details of authentication failures are logged to the server process log.

Failures may also be directed to the OPCOM facility (see OPCOM Logging of WASD Configuration).

Both paths and usernames have permissions associated with them. A path may be specified as read-only, read and write, write-only (yes, I'm sure someone will want this!), or none (permission to do nothing). A username may be specified as read capable, read and write capable, or only write capable. For each transaction these two are combined to determine the maximum level of access allowed. The allowed action is the logical AND of the path and username permissions.

The permissions may be described using the HTTP method names, or using the more concise abbreviations R, W, and R+W.

| Path/User | DELETE | GET | HEAD | POST | PROPFIND | PUT | WebDAV |
|---|---|---|---|---|---|---|---|
| READ or R | no | yes | yes | no | yes | no | no |
| WRITE or W | yes | no | no | yes | no | yes | yes |
| R+W | yes | yes | yes | yes | yes | yes | yes |
| NONE | no | no | no | no | no | no | no |
| DELETE | yes | yes | no | no | no | no | no |
| GET | no | yes | no | no | no | no | no |
| HEAD | no | no | yes | no | no | no | no |
| POST | no | no | no | yes | no | no | no |
| PROPFIND | no | no | no | no | yes | no | no |
| PUT | no | yes | no | no | no | yes | no |
| Other WebDAV | no | no | no | no | no | no | yes |
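The logical AND of path and username permissions can be pictured as a set intersection. The Python sketch below models only the READ, WRITE, R+W and NONE rows of the table, uses "WEBDAV" as a placeholder for the other WebDAV methods, and uses names invented for the example; it is not how the server represents permissions internally.

```python
# Methods granted by each permission keyword, taken from the table rows
# READ, WRITE, R+W and NONE. "WEBDAV" stands in for other WebDAV methods.
READ = frozenset({"GET", "HEAD", "PROPFIND"})
WRITE = frozenset({"DELETE", "POST", "PUT", "WEBDAV"})
PERMISSIONS = {
    "READ": READ,
    "R": READ,
    "WRITE": WRITE,
    "W": WRITE,
    "R+W": READ | WRITE,
    "NONE": frozenset(),
}


def allowed_methods(path_permission, user_permission):
    """Methods permitted by BOTH the path and the username (logical AND)."""
    return PERMISSIONS[path_permission.upper()] & PERMISSIONS[user_permission.upper()]


def is_allowed(method, path_permission, user_permission):
    """True if the method survives the intersection of both permissions."""
    return method.upper() in allowed_methods(path_permission, user_permission)
```

For instance, a read-only username on an R+W path can GET but not PUT, and a write-only path combined with a read-only username permits nothing at all.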

Requiring a particular path to be authorized in the HTTP transaction is accomplished by applying authorization requirements against that path in a configuration file. This is an activity distinct from setting up and maintaining any authentication/authorization databases required for the environment.

By default, the system-table logical name WASD_CONFIG_AUTH locates a common authorization configuration file, unless an individual rule file is specified using a job-table logical name. Simple editing of the file changes the configuration. Comment lines may be included by prefixing them with the hash "#" character, and lines continued by placing the backslash character "\" as the last character on a line.

The [IncludeFile] directive is common to all WASD configuration, allowing a separate file to be included as a part of the current configuration (see Include File Directive of WASD Configuration).

Configuration directives begin either with a "[realm]", "[realm;group]" or "[realm;group-r+w;group-r]" specification, with the forward-slash of a path specification, or with a "[AuthProxy]" or "[AuthProxyFile]" introducing a proxy mapping. Following the path specification are HTTP method keywords controlling group and world permissions to the path, and any access-restricting request scheme ("https:") and/or host address(es) and/or username(s).

Square brackets are used to enclose a [realm;group;group] specification, introducing a new authentication grouping. Within these brackets is specified the realm name (authentication source), and then optional group (authorization source) names separated by semi-colons. All path specifications following this are authenticated against the specified realm database, and permissions obtained from the group "[realm;group]" database (or authentication database if group not specified), until the next [realm;group;group] specification.

The following shows the format of an authentication source (realm) only directive.

This one, the format of a directive using both authentication and authorization sources (both realm and group).

The third variation, using an authentication, full-access (read and write) and read-only authorization sources (realm and two grouping).

The authentication source may also be given a description. This is the text the browser dialog presents during password prompting. See ‘Realm Description’ in 3.5 Authentication Sources.

Paths are usually specified terminated with an asterisk wildcard. This implies that any directory tree below this is included in the access control. Wildcards may be used to match any portion of the specified path, or not at all. Following the path specification are control keywords representing the HTTP methods or permissions that can be applied against the path, and optional access-restricting list of host address(es) and/or username(s), separated using commas. Access control is against either or both the group and the world. The group access is specified first followed by a semi-colon separated world specification. The following show the format of the path directive, see the examples below to further clarify the format.

The [AuthProxy] and [AuthProxyFile] directives introduce one or more SYSUAF proxy mappings (3.10.5 VMS Account Proxying).

The same path cannot be specified against two different realms for the same virtual service. The reason lies in the HTTP authentication schema, which allows for only one realm in an authentication dialog. How would the server decide which realm to use in the authentication challenge? Of course, different parts of a given tree may have different authorizations, however any tree ending in an asterisk results in the entire sub-tree being controlled by the specified authorization environment, unless a separate specification exists for some inferior portion of the tree.

There is a thirty-one character limit on authentication source names.

The following realm names are reserved and have special functionality.

By default accounts with SYSPRV authorized are always rejected to discourage the use of potentially significant usernames (e.g. SYSTEM). Accounts that are disusered, have passwords that have expired, or that are captive or restricted are also automatically rejected.

The authentication source may be disguised by giving it a specific description. This will be the text the browser dialog presents during password prompting. See ‘Realm Description’ in 3.5 Authentication Sources.

See 3.10 SYSUAF-Authenticated Users for further information on these topics.

The following username is reserved.

If a host name, protocol identifier or username is included in the path configuration directive it acts to further limit access to matching clients (path and username permissions still apply). If more than one are included a request must match each. If multiple host names and/or usernames are included the client must match at least one of each. Host and username strings may contain the asterisk wildcard, matching one or more consecutive characters. This is most useful when restricting access to all hosts within a given domain, etc. In addition a VMS security profile may be associated with the request.
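The "match each kind, and at least one of each list" rule can be sketched as follows. This Python fragment takes the restrictions already separated into schemes, hosts and usernames (how the configuration syntax distinguishes them is not modelled here), treats the asterisk as one or more characters as stated above, and uses hypothetical host names and usernames throughout.

```python
import re


def wildcard_match(pattern, value):
    """Case-insensitive match where '*' stands for one or more characters."""
    regex = ".+".join(re.escape(part) for part in pattern.split("*"))
    return re.fullmatch(regex, value, re.IGNORECASE) is not None


def passes_restrictions(request, schemes=(), hosts=(), usernames=()):
    """Each non-empty restriction list must be satisfied by at least one entry."""
    checks = (
        (schemes, request["scheme"]),
        (hosts, request["host"]),
        (usernames, request["username"]),
    )
    for patterns, value in checks:
        if patterns and not any(wildcard_match(p, value) for p in patterns):
            return False
    return True
```

An empty list places no restriction of that kind, so a path with no restriction keywords is limited only by the usual path and username permissions.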

These examples show the use of a network mask to restrict based on the source network of the client. The first, four octets supplied as a mask. The second a VLSM used to specify the length of the network component of the address.

These examples both specify a 6 bit subnet. With the above examples the host 131.185.250.250 would be accepted, but 131.185.250.50 would be rejected.
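The arithmetic behind the two mask notations can be checked with Python's standard ipaddress module. The network address 131.185.250.192 used here is an assumption chosen to be consistent with the accepted and rejected hosts stated above, and the sketch reads "6 bit subnet" as a 26-bit network prefix leaving six host bits; neither detail should be taken as the exact directive syntax.

```python
import ipaddress


def client_in_network(client, network_spec):
    """True if the client address falls inside the given network."""
    network = ipaddress.ip_network(network_spec, strict=False)
    return ipaddress.ip_address(client) in network


# Assumed network address; the same network written two ways.
DOTTED_MASK = "131.185.250.192/255.255.255.192"  # four octets supplied as a mask
PREFIX_LENGTH = "131.185.250.192/26"             # length of the network component
```

Both notations describe the addresses 131.185.250.192 through 131.185.250.255, which is why a host ending in .250 is inside the network and one ending in .50 is not.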

Note that it is more efficient to place protocol and host restrictions at the front of a list.

Authentication credentials may be validated against one of several sources, each with different characteristics.

An identifier is indicated by appending a "=ID" to the name of the realm or group. Also refer to 3.10.3 Rights Identifiers.

Whether or not any particular username is allowed to authenticate via the SYSUAF may be controlled by that account holding or not holding a particular rights identifier. Placing "=ID" against realm name implies the username must exist in the SYSUAF and hold the specified identifier name.

When (and only when) a username has been authenticated via the SYSUAF, rights identifiers associated with that account may be used to control the level-of-access within that realm. This is in addition to any identifier controlling authentication itself.

In this example a username would need to hold the PROJECT_A identifier to be able to authenticate, PROJECT_A_LIBRARIAN to write the path(s) (via POST, PUT) and PROJECT_A_USER to be able to read the path(s).

The server system SYSUAF may be used to authenticate usernames using the VMS account name and password. The realm being VMS may be indicated by using the name "VMS", by appending "=VMS" to another name making it a VMS synonym, or by giving it a specific description (‘Realm Description’ in 3.5 Authentication Sources). Further information on SYSUAF authentication may be found in 3.10 SYSUAF-Authenticated Users. These examples illustrate the general idea.

Three Authentication and Credential Management Extension (ACME) agents are currently available (as at VMS V8.3 and WASD v9.3), "VMS" (SYSUAF), "MSV1_0" (Microsoft domain authentication used by Advanced Server) and an LDAP kit. There is also an API that will allow local or third-party agents to be developed. WASD ACME authentication is completely asynchronous and so agents that make network or other relatively latent queries will not add granularity into server processing. By default ACME is used to authenticate requests against the SYSUAF on Alpha and Itanium running VMS V7.3 or later (3.10.1 ACME).

For authorization rules explicitly specifying ACME the Domain Of Interpretation (DOI) becomes the realm name, interposed between the realm description and the ACME authentication source keyword. In this first example the DOI is VMS and so all WASD SYSUAF authentication capabilities are available.

In the second example authentication is performed using the same credentials as Advanced Server running on the local system.

In this final example the DOI is a third-party agent.

A plain-text list may be used to provide usernames for group membership. The format is one username per line, at the start of the line, with optional, white-space delimited text continuing along the line (which could be used as documentation). Blank lines and comment lines are ignored. A line may be continued by ending it with a "\" character. These files may, of course, be created and maintained using any plain text editor. They must exist in the WASD_AUTH: directory, have an extension of ".$HTL", and do not need to be world accessible.

Simple lists are indicated in the configuration by appending a "=LIST" to the name.

It is also possible to use a simple list for authentication purposes. The plain-text password is appended to the username with a trailing equate symbol. Although in general this is not recommended, as everything is stored as plain-text, it may be suitable as an ad hoc solution in some circumstances. The following example shows the format.
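To make the file layout concrete, here is a small Python sketch of a reader for such a list. It is an illustration only and rests on several assumptions not confirmed above: that comment lines begin with "#" (as they do in the authorization configuration file), that a password entry takes the form username=password, and that the first white-space delimited token on a line is the entry. The function name is invented for the example.

```python
def parse_simple_list(text):
    """Return {username: password-or-None} from simple-list text."""
    # First join lines continued with a trailing backslash.
    logical_lines, pending = [], ""
    for raw in text.splitlines():
        if raw.endswith("\\"):
            pending += raw[:-1]
            continue
        logical_lines.append(pending + raw)
        pending = ""
    if pending:
        logical_lines.append(pending)

    entries = {}
    for line in logical_lines:
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):  # blank or (assumed) comment
            continue
        token = stripped.split(None, 1)[0]  # trailing text is documentation only
        username, separator, password = token.partition("=")
        entries[username] = password if separator else None
    return entries
```

Entries without an equate symbol carry no password and so could only serve for group membership.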

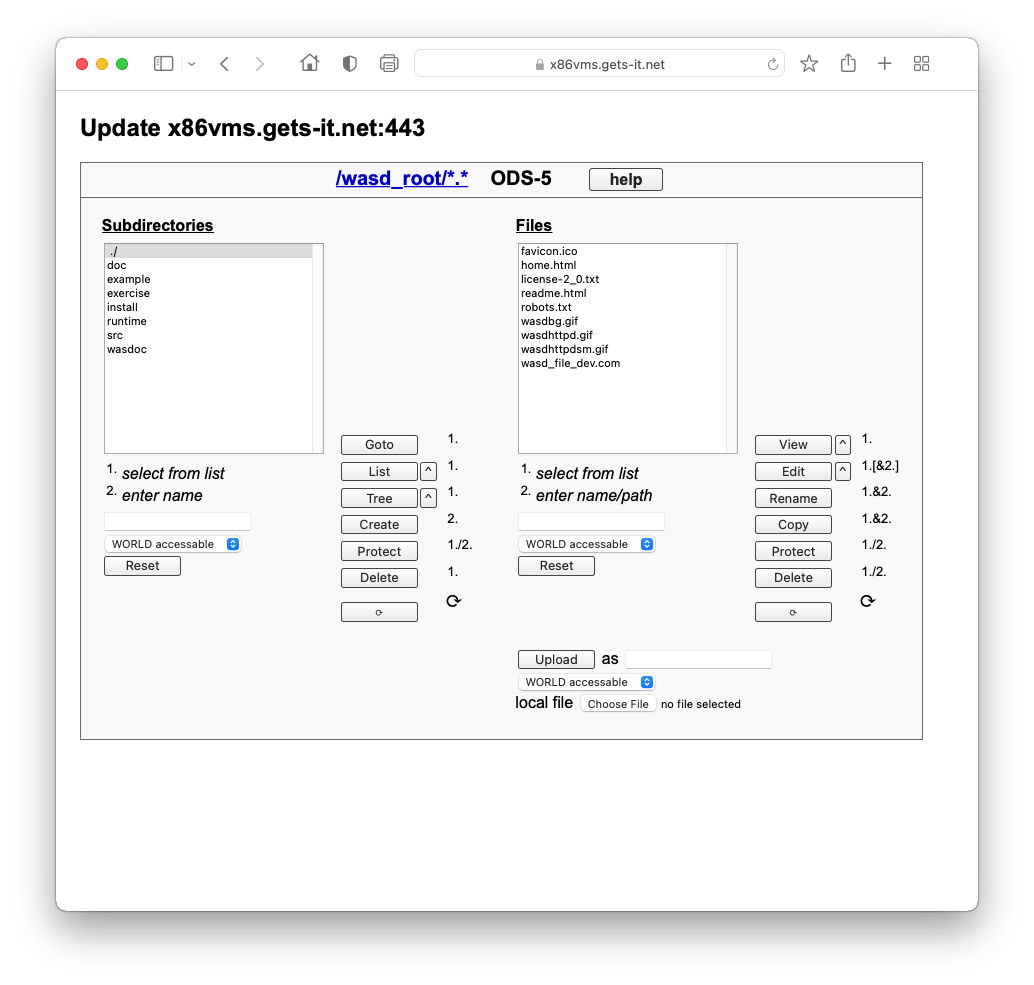

These are binary, fixed 512 byte record files, containing authentication and authorization information. HTA databases may be used for authentication and group membership purposes. The content is much the same, the role differs according to the location in the realm directive. These databases may be administered using the online Server Administration facility (9.5 HTTPd Server Revise) or the HTAdmin command-line utility (13.8 HTAdmin). They are located in the WASD_AUTH: directory and have an extension of ".$HTA".

(Essentially for historical reasons) HTA databases are the default sources for authorization information. Therefore, using just a name, with no trailing "=something", will configure an HTA source. Also, and recommended for clearly showing the intention, appending the "=HTA" qualifier specifies an HTA database. The following examples show some of the variations.
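Separately from the configuration examples, the way source-type suffixes combine within a "[realm;group;group]" specification can be sketched in Python. This is a simplified reading of the rules in this section, not the server's parser: it ignores realm descriptions, the ACME form and host or network-mask groups, the set of special realm names contains only those mentioned in this document (it is not a complete list), and the sample specifications used with it are hypothetical spellings.

```python
# Realm names mentioned in this document; NOT a complete list.
SPECIAL_REALMS = {"VMS", "X509", "RFC1413", "EXTERNAL", "OPAQUE"}


def parse_source(text):
    """Split 'name=TYPE' into (name, TYPE); a bare name defaults to HTA."""
    name, separator, kind = text.strip().partition("=")
    if not name:
        raise ValueError("empty source name")
    if separator:
        return name, kind.upper()
    if name.upper() in SPECIAL_REALMS:
        return name, name.upper()
    return name, "HTA"


def parse_realm_spec(spec):
    """Parse '[realm]', '[realm;group]' or '[realm;group-r+w;group-r]'."""
    spec = spec.strip()
    if not (spec.startswith("[") and spec.endswith("]")):
        raise ValueError("expected [realm;group;group]")
    sources = [parse_source(part) for part in spec[1:-1].split(";")]
    if len(sources) > 3:
        raise ValueError("at most one realm and two groups")
    return {"realm": sources[0], "groups": sources[1:]}
```

Under these assumptions "[Staff]" and "[Staff=HTA]" resolve to the same HTA source, which is the default behaviour described above.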

Uses X.509 v3 certificates (browser client certificates) to establish identity (authentication) and based on that identity control access to server resources (authorization). This is only available for SSL transactions. See 4. Transport Layer Security for further information on SSL, and 4.5.12 Authorization Using X.509 Certification on X509 realm authorization.

From RFC1413 (M. St.Johns, 1993) …

The Identification Protocol is not intended as an authorization or access control protocol. At best, it provides some additional auditing information with respect to TCP connections. At worst, it can provide misleading, incorrect, or maliciously incorrect information.

Nevertheless, RFC1413 may be useful for some purposes in some heterogeneous environments, and so has been made available for authentication purposes.

The RFC1413 realm generates no browser username/password dialog. It relies on the system supporting the client to return a reliable identification of the user accessing the HTTP server by looking-up the user of the server connection's peer port.

An authorization agent is a CGI-compliant CGIplus script that is specially activated during the authorization processing. Using CGI environment variables it gets details of the request, makes an assessment based on its own internal authentication/authorization processing, and using the script callout mechanism returns the results to the server, which then acting on these, allows or denies access.

Such agents allow a site to develop local authentication/authorization mechanisms relatively easily, based on CGI principles. A discussion of such a development is not within the scope of this section; see the WASD Web Services - Scripting document for information on the use of callouts, and the example and working authorization agents provided in the WASD_ROOT:[SRC.AGENT] directory. The description at the beginning of these programs covers these topics in some detail.

An authorization agent would be configured using something like the following, where the "AUTHAGENT" is the actual script name doing the authorization. This has the path "/cgiauth-bin/" prepended to it.

It is possible to supply additional, per-path information to an agent. This can be any free-form text (up to a maximum length of 63 characters). This might be a configuration file location, as used in the example CEL authenticator. For example

Generally authorization agent scripts use 401/WWW-Authenticate: transactions to establish identity and credentials. It is possible for an agent to establish identity outside of this using mechanisms available only to itself. In this case it is necessary to suppress the usually automatic generation of username/password dialogs using a realm of agent+opaque

An older mechanism required a leading parameter of "/NO401". It is included here only for reference. The agent+opaque realm should now always be used.

It is necessary to have the following entry in the WASD_CONFIG_MAP configuration file:

This allows authentication scripts to be located outside of the general server tree if desired.

A token is a short-lived, cookie-delivered representation of authentication established in another context. It was originally devised to allow controlled access to very large datasets without the overhead of SSL in the transmission but with access credentials supplied in the privacy of an SSL connection. The cookie contains NO CREDENTIAL data at all and the authenticator manages an internal database of these so it can determine whether any supplied token is valid and when that token has expired. By default (and commonly) token authorisation occurs in non-SSL space (http:) and the credential authorisation in SSL space (https:).

Token authorisation is described in 3.11 Token Authentication.
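The idea of an opaque, short-lived token backed by an internal database can be sketched in Python as follows. The class name, the ten-minute default lifetime and the in-memory dictionary are assumptions made for illustration; the actual authenticator is described in the section referenced above.

```python
import secrets
import time


class TokenStore:
    """Issue random tokens and remember who they belong to and when they expire."""

    def __init__(self, lifetime_seconds=600, clock=time.monotonic):
        self._lifetime = lifetime_seconds
        self._clock = clock
        self._tokens = {}  # token -> (username, expiry time)

    def issue(self, username):
        # The token is random data only; it carries no credential information.
        token = secrets.token_urlsafe(32)
        self._tokens[token] = (username, self._clock() + self._lifetime)
        return token

    def validate(self, token):
        """Return the username for a live token, otherwise None."""
        entry = self._tokens.get(token)
        if entry is None:
            return None
        username, expires = entry
        if self._clock() >= expires:
            del self._tokens[token]  # expired tokens are forgotten
            return None
        return username
```

In this picture the token would be issued after credentials are checked over https: and then presented as a cookie on http: requests, where only the lookup in the store is needed.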

Instead of a list of usernames contained in a database, a group within a realm (either or both full-access-source or read-only-source, see 3.4 Authorization Configuration File) may be specified as a host, group of hosts or network mask. This acts to restrict all requests from clients not matching the IP address specification. Unlike the per-path access restrict list (‘Access Restriction Keywords’ in 3.4 Authorization Configuration File) this construct applies to all paths in the realm. It also offers relative efficiencies over restriction lists and lends itself to some environments based on per-host identification (e.g. the RFC1413 realm). Note that IP addresses can be spoofed (impersonated) so this form of access control should be deployed with some caution.

The examples of realm specifications above all act to restrict read-write access via the RFC1413 realm to hosts within the 131.185.250.nnn subnet.

Generally the WASD model is for the server to perform authorisation processing and so the password never becomes visible at the application level. For scripting environments performing their own authentication the server will decode and parse the request "Authorization:" header for paths under the EXTERNAL realm.

The various authentication data are then provided in the CGI variables

If the script is performing its own authentication and authorisation using the raw request header then the server needs to be advised of this by placing the required paths under the OPAQUE realm.

The server will then provide only the "Authorization:" header data in the CGI variable HTTP_AUTHORIZATION, from which the username and password may be processed.
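A script handling the raw header itself might decode it along the following lines. This Python sketch assumes the HTTP "Basic" scheme (base64 of "username:password") and UTF-8 text, and the function name is invented for the example; other schemes would need their own handling.

```python
import base64
import binascii


def parse_basic_authorization(header_value):
    """Return (username, password) from a Basic header value, else None."""
    scheme, _, encoded = header_value.strip().partition(" ")
    if scheme.lower() != "basic" or not encoded.strip():
        return None
    try:
        decoded = base64.b64decode(encoded.strip(), validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return None
    username, separator, password = decoded.partition(":")
    if not separator:
        return None
    return username, password
```

In a CGI script the value would typically be read from the environment, for example with os.environ.get("HTTP_AUTHORIZATION", ""), before being passed to this function.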

A realm directive may contain one or more different types of authorization information source, with the following restrictions.

It is possible to supply text describing the authentication realm to the browser user that differs from the actual source name. This may be used to disguise the actual source or to provide a more informative description than the source name conveys.

Prefixing the actual realm source name with a double-quote delimited string (of up to 31 characters) and an equate symbol will result in the string being sent to a browser as the realm description during an authentication challenge. Here are some examples.

WASD authorization offers a number of combinations of access control. This is a summary. Please note that when referring to the level-of-access a particular username may be allowed (read-only or full, read-write access), it is always moderated by the level-of-access provided with a path configured within that realm. See 3.3 Permissions, Path and User.

When a path is controlled by a realm that comprises an authentication source only, as in this example

Where a group membership source is provided following the authentication source, as illustrated in this example

When a second group is specified, as in

The second group may be specified as an asterisk wildcard ("*") which is interpreted as everyone else (i.e. everyone else gets read-only access).

As described in Virtual Services of WASD Configuration, virtual service syntax may be used with authorization mapping to selectively apply rules to one specific service. This example provides the essentials of using this syntax. Note that service-specific and service-common rules may be mixed in any order allowing common authorization environments to be shared.

The online Server Administration facility path authorization report (9.4 HTTPd Server Reports) provides a selector allowing the viewing and checking of rules showing all services or only one particular virtual server, making it simpler to see exactly what any particular service is authorizing against.

Mixed case is used in the configuration examples (and should be in configuration files) to assist in readability. Rule interpretation however is completely case-insensitive.

WASD authorization allows for very simple authorization environments and provides the scope for quite complex ones. The path authentication scheme allows for multiple, individually-maintained authentication and authorization databases that can then be administered by autonomous managers, applying to widely diverse paths, all under the ultimate control of the overall Web administrator.

Fortunately great complexity is not generally necessary.

Most sites would be expected to require only an elementary setup allowing a few selected Web information managers the ability to write to selected paths. This can best be provided with the one authentication database containing read and write permissions against each user, with an access-restriction list against individual paths.

For example, consider a site with three departments, each of which wishes to have three representatives capable of administering the departmental Web information. Authentication is via the SYSUAF. Web administrators hold an appropriate VMS rights identifier, "WEBADMIN". Department groupings are provided by three simple lists of names, including the Web administrators (whose rights identifier would not be applied if access control is via a simple list). A fourth list names those with read-only access into the Finance area. The four grouping files would look like:

The authorization configuration file then contains:

Accesses to authentication sources (SYSUAF, simple lists and HTA databases) are relatively expensive operations. To reduce the impact of this activity on request latency and general server performance, authentication and realm-associated permissions for each authenticated username are stored in a cache. This means that only the initial request needs to be checked from the appropriate databases; subsequent ones are resolved more quickly and efficiently from cache.

Such cached entries have a finite lifetime associated with them. This ensures that authorization information associated with that user is regularly refreshed. This period, in minutes, is set using the [AuthCacheMinutes] configuration parameter. Zero disables caching with a consequent impact on performance.

Wherever a cache is employed there arises the problem of keeping the contents current. The simple lifetime on entries in the authentication cache means they will only be checked for currency when that lifetime expires. Changes may have occurred to the databases in the meantime.

Generally there are no such considerations when adding user access. Previously the user's attempt failed (and was evaluated each time); now the user is allowed access and the result is cached.

When removing or modifying access for a user the cached contents must be taken into account. The user will continue to experience the previous level of access until the cache lifetime expires on the entry. When making such changes it is recommended to explicitly purge the authentication cache either from the command line using /DO=AUTH=PURGE (9.7 HTTPd Command Line) or via the Server Administration facility (9. Server Administration). Of course the other solution is just to disable caching, which is a less than optimal solution.
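The caching behaviour described above can be pictured with a small sketch. This is only an illustration in Python under stated assumptions, not the server's implementation: the class name `AuthCache`, the `load_from_source` callback and the injectable clock are all invented for the example. It shows a fixed entry lifetime (as set by [AuthCacheMinutes]), failures being re-evaluated each time, and an explicit purge.

```python
import time


class AuthCache:
    """Toy authentication cache with a fixed entry lifetime (illustrative only)."""

    def __init__(self, lifetime_minutes, clock=time.monotonic):
        self.lifetime = lifetime_minutes * 60  # seconds; zero disables caching
        self.clock = clock                     # injectable so expiry can be tested
        self.entries = {}                      # (realm, username) -> (permissions, stored_at)

    def lookup(self, realm, username, load_from_source):
        """Return permissions, consulting the source only on a miss or expiry."""
        key = (realm, username)
        now = self.clock()
        hit = self.entries.get(key)
        if hit is not None and now - hit[1] < self.lifetime:
            return hit[0]                      # resolved from cache
        permissions = load_from_source(realm, username)
        # Assumption: a failed attempt (None) is not cached, so it is
        # evaluated again on the next request.
        if permissions is not None and self.lifetime > 0:
            self.entries[key] = (permissions, now)
        return permissions

    def purge(self):
        """Discard every entry so the next request is checked against the source."""
        self.entries.clear()
```

In this model a change to the underlying database becomes visible only after the entry's lifetime has elapsed or after `purge()` is called, which is why an explicit purge is recommended when access is removed or reduced.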

The ability to authenticate using the system's SYSUAF is controlled by the server /SYSUAF[=keyword] qualifier. By default it is disabled.

By default accounts with SYSPRV authorized are always rejected to discourage the use of potentially significant usernames (e.g. SYSTEM). This behaviour can be changed through the use of specific identifiers, see 3.10.3 Rights Identifiers immediately below. Accounts that are disusered, have passwords that have expired or that are captive or restricted are always rejected. Accounts that have day/time access restrictions will have those restrictions honoured (see 3.10.3 Rights Identifiers for a workaround for this).

Also see 3.10.6 Nil-Access VMS Accounts.

By default the Authentication and Credential Management Extension (ACME) is used to authenticate SYSUAF requests on Alpha and Itanium running VMS V7.3 or later (3.5 Authentication Sources). The advantage of ACME is with the processing of the (rather complex) authentication requirements by a vendor-supplied implementation. It also allows SYSUAF password change to be made subject to the full site policy (password history, dictionary checking, etc.) which WASD does not implement.

By default SYSUAF authentication uses the NETWORK access restriction from the account SYSUAF record. Alternatives LOCAL, DIALUP and REMOTE may be specified using global configuration directive

Whether or not any particular username is allowed to authenticate via the SYSUAF may be controlled by that account holding or not holding a particular VMS rights identifier. When a username has been authenticated via the SYSUAF, rights identifiers associated with that account may be used to control the level-of-access within that realm.

Use of identifiers for these purposes is enabled using the /SYSUAF=ID server startup qualifier.

The first three reserved identifier names are optional. A warning will be reported during startup if these are not found. The fourth must exist if SYSUAF proxy mappings are used in a /SYSUAF=ID environment.

Identifiers may be managed using the following commands. If unsure of the security implications of this action consult the relevant VMS system management security documentation.

They can then be provided to desired accounts using commands similar to the following:

Be aware that, as with all successful authentications, and due to the WASD internal authentication cache, changing database contents does not immediately affect access. Any change in the RIGHTSLIST won't be reflected until the cache entry expires or it is explicitly flushed.

Any authentication realm can have its usernames mapped into VMS usernames and the VMS username used as if it had been authenticated from the SYSUAF. This is a form of proxy access.

When a proxy mapping occurs request user authorization detail reflects the SYSUAF username characteristics, not the actual original authentication source. This includes username, user details (i.e. becomes that derived from the owner field in the SYSUAF), constraints on the username access (e.g. SSL only), and user capabilities including any profile if enabled. Authorization source detail remains unchanged, reflecting the realm, realm description and group of the original source. For CGI scripting an additional variable, WWW_AUTH_REMOTE_USER, provides the original remote username.

For each realm, and even for each path, a different collection of mappings can be applied. Proxy entries are strings containing no white space. There are three basic variations, each with an optional host or network mask component.

The "SYSUAF" is the VMS username being mapped to. The remote is the remote username (CGI variable WWW_REMOTE_USER). The first variation maps a matching remote username (and optional host/network) onto the specific SYSUAF username. The second maps all remote usernames (and optional host/network) to the one SYSUAF username (useful as a final mapping). The third maps all remote usernames (optionally on the remote host/network) into the same SYSUAF username (again useful as a final mapping if there is a one-to-one equivalence between the systems).

Proxy mappings are processed sequentially from first to last until a matching rule is encountered. If none is found authorization is denied. Match-all and default mappings can be specified.

In this example the username bloggs on system 131.185.250.1 can access as if the request had been authenticated via the SYSUAF using the username and password of FRED, although of course no SYSUAF username or password needs to be supplied. The same applies to the second mapping, doe on the remote system to JOHN on the VMS system. The third mapping disallows a system account ever being mapped to the VMS equivalent. The fourth, wildcard mapping, maps all accounts on all systems in 131.185.250.0 8 bit subnet to the same VMS username on the server system. The fifth mapping provides a default username for all other remote usernames (and used like this would terminate further mapping).

Note that multiple, space-separated proxy entries may be placed on a single line. In this case they are processed from left to right and first to last.
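The first-match processing described above can be sketched as follows. This is a conceptual Python illustration only: the tuple representation is not WASD's proxy entry syntax, the default username `GUEST` is invented, the 8 bit subnet is modelled as a /24 network, and case-insensitive username comparison is an assumption.

```python
import ipaddress

# Hypothetical in-memory form of the five mappings discussed above:
# (remote username or "*", network or None, SYSUAF username, "*" or None to refuse)
MAPPINGS = [
    ("bloggs", "131.185.250.1/32", "FRED"),
    ("doe", "131.185.250.1/32", "JOHN"),
    ("system", None, None),           # a system account is never mapped
    ("*", "131.185.250.0/24", "*"),   # same username on the VMS system
    ("*", None, "GUEST"),             # default mapping; GUEST is an invented name
]


def map_proxy(remote_user, remote_host, mappings=MAPPINGS):
    """Return the mapped VMS username, or None when authorization is denied."""
    address = ipaddress.ip_address(remote_host)
    for user, network, target in mappings:
        if user != "*" and user.lower() != remote_user.lower():
            continue                  # username does not match this entry
        if network is not None and address not in ipaddress.ip_network(network):
            continue                  # host/network does not match this entry
        if target is None:
            return None               # explicit refusal ends the search
        return remote_user.upper() if target == "*" else target
    return None                       # no matching rule: denied
```

Because the search stops at the first match, the order of entries matters: the refusal for `system` must come before the wildcard entries or it would never be reached.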

Proxy mapping rules should be placed after a realm specification and before any authorization path rules in that realm. In this way the mappings will apply to all rules in that realm. It is possible to change the mappings between rules. Just insert the new mappings before the (first) rule they apply to. This cancels any previous mappings and starts a new set. This is an example.

An alternative to in-line proxy mapping is to provide the mappings in one or more independent files. In-line and in-file mappings may be combined.

To cancel all mappings for following rules use an [AuthProxy] (with no following mapping detail). Previous mappings are always cancelled with the start of a new realm specification. Where proxy mapping is not enabled at the command line or a proxy file cannot be loaded at startup a proxy entry is inserted preventing all access to the path.

REMEMBER – proxy processing can be observed using the WATCH facility.

It is possible, and may be quite effective for some environments, to have a SYSUAF account or accounts strictly for HTTP authorization, with no actual interactive or other access allowed to the VMS system itself. This would relax the caution on the use of SYSUAF authentication outside of SSL transactions. An obvious use would be for the HTTP server administrator. Additional accounts could be provided for other authorization requirements, all without compromising the system's security.

In setting up such an environment it is vital to ensure the HTTPd server is started using the /SYSUAF=ID qualifier (3.2 Authentication Policy). This will require all SYSUAF-authenticated accounts to possess a specific VMS resource identifier; accounts that do not possess the identifier cannot be used for HTTP authentication. In addition the identifier WASD_NIL_ACCESS will need to be held (3.10.3 Rights Identifiers), allowing the account to authenticate despite being restricted by REMOTE and NETWORK time restrictions.

To provide such an account select a group number that is currently unused for any other purpose. Create the desired account using whatever local utility is used then activate VMS AUTHORIZE and effectively disable access to that account from all sources and grant the appropriate access identifier (see 3.10.3 Rights Identifiers above).

When SSL is in use (4. Transport Layer Security) the username/password authentication information is inherently secured via the encrypted communications of SSL. To enforce access to be via SSL add the following to the WASD_CONFIG_MAP configuration file:

Note that this mechanism is applied after any path and method assessment made by the server's authentication schema.

The qualifier /SYSUAF=SSL provides a powerful mechanism for protecting SYSUAF authentication, restricting SYSUAF authenticated transactions to the SSL environment. The combination /SYSUAF=(SSL,ID) is particularly effective.

Also see 3.2 Authentication Policy.

It is possible to control access to files and directories based on the VMS security profile of a SYSUAF-authenticated remote user. This functionality is implemented using VMS security system services involving SYSUAF and RIGHTSLIST information. The feature must be explicitly allowed using the server /PROFILE qualifier. By default it is disabled.

When a SYSUAF-authenticated user (i.e. the VMS realm) is first authenticated a VMS security-profile is created and stored in the authentication cache (3.9 Authorization Cache). A cached profile is an efficient method of implementing this as it obviously removes the need of creating a user profile each time a resource is assessed. If this profile exists in the cache it is attached to each request authenticated for that user. As it is cached for a period, any change to a user's security profile in the SYSUAF or RIGHTSLIST won't be reflected in the cached profile until the cache entry expires or it is explicitly flushed (9.6 HTTPd Server Action).

When a request has this security profile all accesses to files and directories are assessed against it. When a file or directory access is requested the security-profile is employed by a VMS security system service to assess the access. If allowed, it is provided via the SYSTEM file protection field. Hence it is possible to be eligible for access via the OWNER field but not actually be able to access it because of SYSTEM field protections! If not allowed, a "no privilege" error is generated.

Once enabled using /PROFILE it can be applied to all SYSUAF authenticated paths, but must be enabled on a per-path basis, using the WASD_CONFIG_AUTH profile keyword (‘Access Restriction Keywords’ in 3.4 Authorization Configuration File).

Of course, this functionality only provides access for the server, IT DOES NOT PROPAGATE TO ANY SCRIPT ACCESS. If scripts must have a similar ability they should implement their own scheme (which is not too difficult, see WASD_ROOT:[SRC.MISC]CHKACC.C) based on the CGI variable WWW_AUTH_REALM which would be "VMS" indicating SYSUAF-authentication, and the authenticated name in WWW_REMOTE_USER.

If the /PROFILE qualifier has enabled SYSUAF-authenticated security profiles, whenever a file or directory is assessed for access an explicit VMS security system service call is made. This call builds a security profile of the object being assessed, compares the cached user security profile and returns an indication whether access is permitted or forbidden. This is in addition to any such assessments made by the file system as it is accessed.

This extra security assessment is not done for non-SYSUAF-authenticated accesses within the same server.

For file access this extra overhead is negligible but becomes more significant with directory listings ("Index of") where each file in the directory is independently assessed for access.

Much of a site's package directory tree is inaccessible to the server account. One use of the SYSUAF profile functionality is to allow authenticated access to all files in that tree. This can be accomplished by creating a specific mapping for this purpose, subjecting that to SYSUAF authentication with /PROFILE behaviour enabled (3.10.8 SYSUAF Security Profile), and limiting the access to a SYSTEM group account. As all files in the WASD package are owned by SYSTEM the security profile used allows access to all files.

The following example shows a path with a leading dollar (to differentiate it from general access) being mapped into the package tree. The "set * noprofile" limits the application of this to the /$WASD_ROOT/ path (with the inline "profile").

This path is then subjected to SYSUAF authentication with access limited to an SSL request from a specific IP address (the site administrator's) and the SYSTEM account.

This is a niche authorisation environment for addressing niche requirements.

A token is an HTTP-cookie-delivered representation of authentication established in another context. It was originally devised to allow controlled access to very large datasets without the overhead of SSL in the transmission but with access credentials supplied in the privacy of an SSL connection.

A common scenario is where the client starts off attempting to access a resource in non-SSL space which is controlled by token authentication. In the first instance the authenticator detects there is no access token present and redirects the client (browser) to the SSL equivalent of that space, where credentials can be supplied encrypted. In this example scenario the SSL area is controlled by WASD SYSUAF authentication (can be SSL client certificate, etc.) and the username/password is prompted for. When correctly entered this generates a token. The token is stored (with corresponding detail) as a record in a server-internal database and then returned to the browser as a set-cookie value.

With the token data stored the browser is transparently redirected back to the non-SSL space where the actual access is to be undertaken, this time the browser presenting the cookie containing the token. The authenticator examines the token, looking it up in the database. If the token is found, originated from the same IP address, represents the same authentication realm, and has not expired, the authenticator allows the non-SSL space access to proceed, and in this example scenario the dataset transfer is initiated (in unencrypted clear-text). If the token is not found in the database or has expired, then the process is repeated with a redirect back into SSL space. If the realms differ a 403 forbidden response is issued (see configuration below).

The token itself is a significant sequence of pseudo-random characters, is short-lived (configurable as anything from a few seconds to a few tens of seconds, or more), and as a consequence is frequently regenerated. The token is just that, containing no actual credential data at all. It might be possible to snoop but as it contains nothing of value in itself, expires relatively quickly, and has an originating IP address check, the fairly remote risk of playback is just that.

The authenticator does all the work, implicitly redirecting the user from non-SSL space to SSL space for the original authentication, and then back again with the token used for access in the non-SSL space. With the expiry of a token it undertakes that cycle again, redirecting back to the SSL-space where the browser-cached credentials will be supplied automatically allowing the fresh token to be issued, and then redirected back into non-SSL space for access. To emphasise - all this is transparent to the user.
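The token life cycle described above can be sketched as follows. This is a minimal Python illustration and not the AUTHTOKEN.C implementation: the function names, the in-memory dictionary standing in for the server-internal database, the 30 second default lifetime, and the treatment of an IP address mismatch as a redirect are all assumptions made for the example.

```python
import secrets
import time

# Stand-in for the server-internal database: token -> (realm, client_ip, expires_at)
TOKENS = {}


def issue_token(realm, client_ip, lifetime_seconds=30, now=None):
    """Create an opaque random token once credentials were accepted in SSL space."""
    now = time.time() if now is None else now
    token = secrets.token_urlsafe(32)   # carries no credential data itself
    TOKENS[token] = (realm, client_ip, now + lifetime_seconds)
    return token                        # would be returned as a set-cookie value


def check_token(token, realm, client_ip, now=None):
    """Return 'allow', 'redirect' (back to SSL space) or 'forbidden' (403)."""
    now = time.time() if now is None else now
    record = TOKENS.get(token)
    if record is None:
        return "redirect"               # unknown token: authenticate again
    stored_realm, stored_ip, expires_at = record
    if now >= expires_at:
        del TOKENS[token]
        return "redirect"               # expired: a fresh token is needed
    if stored_realm != realm:
        return "forbidden"              # change of realm using the one token
    if stored_ip != client_ip:
        return "redirect"               # assumption: outcome not stated in the text
    return "allow"
```

A browser holding a valid token is allowed straight through; anything else sends it back through the SSL-space rule, where cached credentials produce a new token without user involvement.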

As a consequence of this model the resource being controlled can ONLY be accessed from non-SSL space using the controlled path. To access the same resource from SSL space a distinct path to the resource must be provided.

As token authorisation relies on the client agent having HTTP cookies enabled (globally or specifically for the site) it is useful to have this tested for and/or advised about, on some related but other area of the site. There are simple techniques using JavaScript for detecting the availability of cookie processing. Search the Web for a suitable solution.

The automatic authorisation and redirection occurs using a combination of two distinguishable authorisation rules, one for supplying the credentials, the other for using the token for authorisation. In this example (and commonly) the resources are at "/location/" and the configuration accepts user-supplied credentials in SSL space and uses the token in non-SSL space. The asterisk just indicates that in the absence of any other parameter this authorisation rule has a complementary token rule where access will actually occur.

And in this example, the same arrangement but with non-standard ports (specified using an integer with a leading colon).

To prevent potential thrashing, where multiple, distinct realms within a single request are authorised using tokens, corresponding multiple token (cookie) names must be used. It is expected that this would be an uncommon but not impossible scenario. The "thrashing" would be a result of authorisation associated with a single, particular token name. Where a realm differs from a previous token generated another is required. The token authorisation scheme forces the use of distinct token names by 403-forbidding change of realm using the one token. Use explicitly specified, independent token (cookie) names, or an integer preceded by an ampersand (which appends the integer to the base token name), ensuring the complementary rules are using the same name/integer.

For the final example, the token is contained in the non-default cookie named "Wasd_example" and the authentication performed using an X509 client certificate (which can only be supplied via SSL).

Some additional detail is available from the AUTHTOKEN.C code module.

Provides a username and password that is authenticated from data placed into the global common (i.e. in memory) by the site administrator. The username and password expire (become non-effective) after a period, one hour by default or an interval specified when the username and password are registered.

It is a method for allowing ad hoc authenticated access to the server, primarily intended for non-configured access to the online Server Administration facilities (9.1 Access Before Configuration) but is available for other purposes where a permanent username and password in an authentication database is not necessary. A skeleton-key authenticated request is subject to all other authorization processing (i.e. access restrictions, etc.), and can be controlled using the likes of '~_*', etc.

The site administrator uses the command line directive

The username must begin with an underscore (to reduce the chances of clashing with a legitimate username) and have a minimum of 6 other characters. The password is delimited by a colon and must be at least 8 characters. The optional period in minutes can be from 1 to 10080 (one week). If not supplied it defaults to 60 (one hour). After the period expires the skeleton key is no longer accepted until reset.
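The constraints in the previous paragraph can be expressed as a short check. This is a hedged Python sketch only: the function name `check_skeleton_key` is invented, the three values are passed separately rather than parsed from the command-line directive, and nothing here reflects how the server itself validates the key.

```python
def check_skeleton_key(username, password, minutes=None):
    """Validate the stated constraints; return the effective period in minutes."""
    # Leading underscore plus a minimum of 6 other characters.
    if not username.startswith("_") or len(username) - 1 < 6:
        raise ValueError("username needs a leading underscore and 6 more characters")
    if len(password) < 8:
        raise ValueError("password must be at least 8 characters")
    if minutes is None:
        return 60                       # default of one hour
    if not 1 <= minutes <= 10080:       # 10080 minutes is one week
        raise ValueError("period must be from 1 to 10080 minutes")
    return minutes
```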

The authentication process (with skeleton-key) is performed using these basic steps.

Note that the authenticator resumes looking for a username from a configured authentication source unless the request and skeleton-key usernames match. After that the passwords either match allowing access or do not match resulting in an authentication failure.

The server account should have no direct write access into any directory structure. Files in these areas should be owned by SYSTEM ([1,4]). Write access for the server into VMS directories (using the POST or PUT HTTP methods) should be controlled using VMS ACLs. This is in addition to the path authorization of the server itself of course! The recommendation to have no ownership of files and provide an ACE on required directories prevents inadvertent mapping/authorization of a path resulting in the ability to write somewhere not intended.

Two different ACEs implement two grades of access.

This example shows a suitable ACE that applies only to the original directory:

This example shows a suitable ACE that applies only to the original directory:

To assist with the setting of the required ACEs an example, general-purpose DCL procedure is provided (WASD_ROOT:[EXAMPLE]AUTHACE.COM).

Some sites may be sensitive enough about Web resources that the possibility of providing inadvertent access to some area or another is of major concern. WASD provides a facility that will automatically deny access to any path that does not appear in the authorization configuration file. This does mean that all paths requiring access must have authorization rules associated with them, but if something is missed some resource does not unexpectedly become visible.

At server startup the /AUTHORIZE=ALL qualifier enables this facility.

For paths that require authentication and authorization the standard realms and rules apply. To indicate that a particular path should be allowed access, but that no authorization applies the "NONE" realm may be used. The following example provides some indication of how it should be used.

There is also a per-path equivalent of the /AUTHORIZE=ALL functionality (described in SET Rule of WASD Configuration). This allows a path tree to require that authorization be enabled against it.
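The deny-by-default behaviour of /AUTHORIZE=ALL, together with the "NONE" realm, can be pictured with a small sketch. This is a conceptual Python illustration, not WASD rule syntax: the rule table, the paths in it and the function name `assess` are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical rule table: (path pattern, realm). "NONE" marks a path that is
# allowed without authorization; any other realm requires authentication.
RULES = [
    ("/admin/*", "VMS"),
    ("/docs/*", "NONE"),
]


def assess(path, rules=RULES):
    """Return 'deny', 'allow' or 'authenticate:<realm>' for a request path."""
    for pattern, realm in rules:
        if fnmatchcase(path, pattern):
            return "allow" if realm == "NONE" else "authenticate:" + realm
    return "deny"   # no rule at all: refused when every path must be authorized
```

The point of the design is that an omission fails closed: a path nobody remembered to configure is refused rather than served.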

The server provides for users to be able to change their own HTA passwords (and SYSUAF if required). This functionality, though desirable from the administrator's viewpoint, is not mandatory if the administrator is content to field any password changes, forgotten passwords, etc. Keep in mind that passwords, though not visible during entry, are passed to the server using clear-text form fields (which is why SSL is recommended).

Password modification is enabled by including a mapping rule to the internal change script. For example:

Any database to be enabled for password modification must have a writable authorization path associated with it. For example:

Use some form of cautionary wrapper if providing this functionality over something other than an Intranet or SSL connection:

When using SYSUAF authentication it is possible for a password to be pre-expired, or when a password lifetime is set for a password to expire and require respecification. By default an expired password cannot be used for access. This may be overridden using the following global configuration directive.

Expired passwords may be specially processed by specifying a URL with the WASD_CONFIG_GLOBAL [AuthSysUafPwdExpURL] configuration directive (Alphabetic Listings of WASD Configuration).

The WASD_CONFIG_MAP set auth=sysuaf=pwdexpurl=<string> rule allows the same URL to be specified on a per-path basis. When this is set a request requiring SYSUAF authentication that specifies a username with an expired password is redirected to the specified URL. This should directly or via an explanatory (wrapper) page redirect to the password change path described above. The password change dialog will have a small note indicating the password has expired and allows it to be changed.

The following WASD_CONFIG_GLOBAL directive

It is also possible to redirect an expired password to a site-specific page for input and change. This allows some customization of the language and content of the expired password change dialog. An example document is provided at WASD_ROOT:[EXAMPLE]EXPIRED.SHTML (what it looks like) ready for relocation and customisation. Due to the complexities of passing realm information and then submitting that information to the server-internal change facility some dynamic processing is required via an SSI document.

This example assumes the site-specific document has been located at WEB:[000000]EXPIRED.SHTML and is accessed using SSL.

The reason authorization information is not required to be reentered on subsequent accesses to controlled paths is cached information the browser maintains. It is sometimes desirable to be able to access the same path using different authentication credentials, and correspondingly it would be useful if a browser had a purge authorization cache button, but this is commonly not the case. To provide this functionality the server must be used to "trick" the browser into cancelling the authorization information for a particular path.

This is achieved by adding a specific query string to the path requiring cancellation. The server detects this and returns an authorization failure status (401) regardless of the contents of request "Authorization:" field. This results in the browser flushing that path from the authorization cache, effectively requiring new authorization information the next time that path is accessed.

There are two variations on this mechanism.

Also when using logout with a revalidation period a redirection URL may be appended to the logout query string. It then redirects to the supplied URL. It is important that the redirection is returned to the browser and not handled internally by WASD. Normal WASD redirection functionality applies.

These examples redirect to

Transport Layer Security (TLS), and its predecessor Secure Sockets Layer (SSL), are cryptographic protocols designed to provide communication privacy over a network, in the case of HTTP between the browser (client) and the server. It also authenticates server and optionally client identity. TLS/SSL operates by establishing an encrypted communication path between the two applications, "wrapping" the entire application protocol inside the secure link, providing complete privacy for the entire transaction. In this way security-related data such as user identification and password, as well as sensitive transaction information can be protected from unauthorized access while in transit. This section is not a tutorial on TLS/SSL. It contains only information relating to WASD's use of it. See 4.9 SSL References for further information on TLS/SSL technology.

| WASD implements SSL using a freely available software toolkit supported by the OpenSSL Project. |

OpenSSL licensing allows unrestricted commercial and non-commercial use. This toolkit is in use regardless of whether the WASD OpenSSL package, HP SSL for OpenVMS product, or other stand-alone OpenSSL environment is installed. It is always preferable to move to the latest support release of OpenSSL as known bugs in previous versions are progressively addressed (ignoring the issue of new bugs being introduced ;-)

Be aware that export/import and/or use of cryptography software, or even just providing cryptography hooks, is illegal in some parts of the world. When you re-distribute this package or even email patches/suggestions to the author or other people, please PAY CLOSE ATTENTION TO ANY APPLICABLE EXPORT/IMPORT LAWS. The author of this package is not liable for any violations you make here.

Ralf S. Engelschall (rse@engelschall.com) is the author of the popular Apache mod_ssl package. This section is taken from the mod_ssl read-me and is well-worth some consideration for this and software security issues in general.

Have (or want) a TLS/SSL secured site?

Using self-signed or commercial server certificate(s)?

Let's Encrypt makes it possible to obtain and maintain browser-trusted certificates, simply, automatically and at no cost.

See WASD Certificate Management Environment (wuCME) on the WASD download page at https://wasd.vsm.com.au/wasd/

Secure Sockets Layer functionality is easily integrated into WASD and is available from one (or more) of the following sources. See for the basics of installing WASD SSL and for configuration of various aspects.

This is provided from the directory SYS$COMMON:[SSL111] containing shared libraries, executables and templates for certificate management, etc. If this product is installed and started the WASD installation and update procedures should detect it and provide the option of compiling and/or linking WASD against its shareable libraries.

WASD OpenSSL installation creates an OpenSSL directory in the source WASD_ROOT:[SRC.OPENSSL-n_n_n] (look for it here) containing the OpenSSL copyright notice, object libraries, object modules for building executables, example certificates, and some other support files and documentation.

The OpenSSL v1.1.1 code stream is supported. WASD requires a 32 bit OpenSSL build (the default).

To change linkage use step 2 described in selecting the alternate toolkit build.

OpenSSL v1.1.1 uses the naming schema OSSL$… for logical and file names. It also provides object libraries for a static linked executable, as well as shareable images, for the two main APIs (SSL and crypto). In common with the VSI SSL111 product, the shareable images must be installed to be used with the WASD server privileged executable. The WASD STARTUP.COM procedure will undertake this when directed (see immediately below).

There is one other consideration. For a privileged executable to activate a shareable image, not only must the image be installed but any associated logical names must be defined in executive (or kernel) mode. When executing the OpenSSL v1.1.1 startup procedure P1 must be "SYSTEM/EXECUTIVE" as in the following example:

SSL functionality can be installed with a new package, or with an update, or it can be added to an existing non-SSL enabled site. The following steps give a quick outline for support of SSL.

It is also possible to check the SSL package at any other time using the server demonstration procedure. It is necessary to specify that it is to use the SSL executable. Follow the displayed instructions.

The OPENSSL.EXE application is a command line tool for using the various cryptography functions of OpenSSL's crypto library from the shell. Its use is described several times in this section of the documentation. Refer to the OpenSSL Man page for descriptions of the various commands and their syntax.

It is commonly used as a foreign verb on VMS systems, assigned during SYLOGIN.COM or LOGIN.COM. The assignment depends on the distribution and version in use. For example:

A simple addition to SYLOGIN.COM or LOGIN.COM for WASD-specific OpenSSL kits to assign the OPENSSL verb is:

The example server startup procedure already contains support for the SSL executable. If this has been used as the basis for startup then an SSL executable will be started automatically, rather than the standard executable. The SSL executable supports both standard HTTP services (ports) and HTTPS services (ports). These must be configured using the [service] parameter. SSL services are distinguished by specifying "https:" in the parameter. The default port for an SSL service is 443.
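As an illustration only, the rule that "https:" marks an SSL service and that its port defaults to 443 can be sketched in Python. The function name parse_service and the host names are hypothetical; this is not WASD code, and the default of 80 for "http:" is the usual HTTP convention rather than something stated above.

```python
from urllib.parse import urlsplit

# Default ports per scheme; 443 for https: is stated in the text,
# 80 for http: is the standard HTTP convention (assumption here).
DEFAULT_PORTS = {"http": 80, "https": 443}

def parse_service(spec):
    """Return (scheme, host, port, is_ssl) for a service string.

    Hypothetical helper: models only the scheme/port rule, not the
    full syntax accepted by the [service] parameter.
    """
    parts = urlsplit(spec)
    scheme = parts.scheme.lower()
    if scheme not in DEFAULT_PORTS:
        raise ValueError(f"unsupported scheme in {spec!r}")
    port = parts.port or DEFAULT_PORTS[scheme]
    return scheme, parts.hostname, port, scheme == "https"

print(parse_service("https://www.example.com"))
print(parse_service("http://www.example.com:8080"))
```

The first call yields an SSL service on port 443, the second a standard service on the explicitly given port.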

WASD can configure services using the WASD_CONFIG_GLOBAL [SSL..] directives, the per-service WASD_CONFIG_SERVICE [ServiceSSL..] directives, or the /SSL= qualifier. Configuration precedence is WASD_CONFIG_SERVICE, /SSL= and finally WASD_CONFIG_GLOBAL.
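The precedence order can be pictured with a short Python sketch. It assumes the order given above runs from highest to lowest precedence, and it uses plain dictionaries with hypothetical keys ("cert", "cipher") to stand in for the three configuration sources; it is a model of the lookup, not of how the server stores its configuration.

```python
def resolve_ssl_setting(name, service_cfg, qualifier_cfg, global_cfg):
    """Return the first value found for a setting, searching in order:
    per-service (WASD_CONFIG_SERVICE), /SSL= qualifier, then global
    (WASD_CONFIG_GLOBAL). Dictionaries and keys are hypothetical.
    """
    for source in (service_cfg, qualifier_cfg, global_cfg):
        value = source.get(name)
        if value is not None:
            return value
    return None

# Hypothetical values, for illustration only.
service_cfg = {"cert": "service.pem"}
qualifier_cfg = {"cipher": "from-qualifier"}
global_cfg = {"cert": "global.pem", "cipher": "from-global"}

print(resolve_ssl_setting("cert", service_cfg, qualifier_cfg, global_cfg))
print(resolve_ssl_setting("cipher", service_cfg, qualifier_cfg, global_cfg))
```

Here the certificate comes from the per-service source and the cipher setting from the qualifier, with the global values used only when nothing more specific is present.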

SSL service configuration using the WASD_CONFIG_SERVICE configuration is slightly simpler, with a specific configuration directive for each aspect (see Service Configuration of WASD Configuration). This example illustrates configuring the same services as used in the previous section.

As WASD uses the OpenSSL package in one distribution or another it largely supports all of the capability of that underlying package. The obsolete SSLv2 and the deprecated SSLv3 are no longer accepted by default. The WASD defaults comprise the TLS family of protocols; at the time of writing, TLSv1, TLSv1.1, TLSv1.2 and TLSv1.3.

Some older clients employing SSLv3 may fail. Symptoms are dropped connection establishment and WATCH [x]SSL variously showing "SSL routines SSLn_GET_RECORD wrong version number", "SSL routines SSLn_GET_CLIENT_HELLO unknown protocol", and possibly others. It is generally considered SSL best-practice not to have SSLv3 enabled, but if required it may be supported by configuring WASD_CONFIG_GLOBAL [SSLversion] with "SSLv3,TLSvALL", the per-service WASD_CONFIG_SERVICE equivalent, or using the /SSL=(SSLv3,TLSvALL) command line parameter during server startup.

TLSv1.3 perhaps should have been designated TLSv2.0. It should be considered not an incremental improvement over earlier versions of TLS but a significant upgrade!

TLSv1.3 can be tested for as demonstrated at ‘test TLS Version 1.3’ in 4.8 SSL Service Evaluation.

Ciphers are the algorithms, designed and implemented on mathematical computations, that render readable plaintext into unreadable ciphertext. Ciphers tend to be available in suites (or families) of variants, usually distinguished by key size and therefore resistance to decryption without a known key, that browsers and other agents negotiate on and accept when setting up secure (encrypted) network transport with servers.

Cipher selection is important to the overall security of the supported environment as well as the range of clients and servers that can establish communication due to shared cipher suites. Including only more recent (and technically secure) ciphers can preclude older clients from establishing a secure connection, and including older (and perhaps more susceptible to modern attack) ciphers increases site vulnerability. Some environments, for example HTTP/2, are quite prescriptive regarding the secure connection, to the point of blacklisting protocol versions and cipher suites no longer considered secure enough.

Fortunately a number of sites provide cipher guidelines based on requirements. The Mozilla Developer Network provides these amongst other useful information on security and server side TLS.

https://wiki.mozilla.org/Security/Server_Side_TLS

WASD has a default (built-in) functional cipher list that is general in application and relevant to when it was compiled. This list in particular, and site cipher lists in general, should be reviewed from time to time as opinions and requirements do change.

Many agents (browsers) require the elliptic curve ciphers provided by Forward Secrecy elements (4.5.5 Forward Secrecy) to negotiate later TLS versions.

The OpenSSL package provides for various options to be flagged against a TLS/SSL service. WASD sets the (OpenSSL) default options and then allows these to be overwritten/set/reset using hexadecimal values representing bit patterns. OpenSSL defaults are suitable for most sites.

The SSL options directives in global and per-service configuration, and the OPTIONS= keyword for the /SSL= qualifier, accept

Alternatively, the following OpenSSL option mnemonics can be used with a leading "+" to enable, or "-" to disable
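The idea of adjusting an option bit pattern with "+" and "-" mnemonics can be sketched in Python. This is a minimal model under stated assumptions: the mnemonic names in the table are hypothetical stand-ins (the names WASD accepts are those listed in this section), the bit values are taken from Python's own ssl module, and treating a bare hexadecimal value as a replacement for the whole pattern is an assumption made for the illustration.

```python
import ssl

# Hypothetical mnemonic table; values come from Python's ssl module,
# which exposes the corresponding OpenSSL option bits.
MNEMONICS = {
    "NO_COMPRESSION": int(ssl.OP_NO_COMPRESSION),
    "CIPHER_SERVER_PREFERENCE": int(ssl.OP_CIPHER_SERVER_PREFERENCE),
    "NO_TICKET": int(ssl.OP_NO_TICKET),
}

def apply_options(current, spec):
    """Apply a comma-separated option spec to a bit pattern.

    "+NAME" sets a bit and "-NAME" clears it. A bare hexadecimal value
    replaces the whole pattern (an assumption for this sketch).
    """
    for item in (s.strip() for s in spec.split(",")):
        if not item:
            continue
        if item[0] in "+-":
            bit = MNEMONICS[item[1:].upper()]
            current = current | bit if item[0] == "+" else current & ~bit
        else:
            current = int(item, 16)
    return current

mask = apply_options(0, "+NO_COMPRESSION,+NO_TICKET")
mask = apply_options(mask, "-NO_TICKET")
print(hex(mask))
```

After the two calls only the compression bit remains set, showing how individual options can be enabled and disabled without restating the whole pattern.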

Forward secrecy, sometimes known as perfect forward secrecy (PFS), is a property of key-agreement protocols ensuring that a session key derived from a set of long-term keys cannot be compromised if one of the long-term keys is compromised in the future.

http://en.wikipedia.org/wiki/Forward_secrecy

OpenSSL supports forward secrecy using Diffie-Hellman key exchange with elliptic curve cryptography and this relies on generating ephemeral keys based on unique, safe prime numbers. These are expensive to generate and so this is done infrequently, often during software build or installation. In the case of WASD, to maximise flexibility, these numbers are stored in external PEM-format files, by default located in the WASD_ROOT:[LOCAL] directory. These files are only briefly accessed during server startup SSL initialisation and the content later used during network connection SSL negotiation to generate the required ephemeral keys.

PFS requires a small number of elements working in concert

The detail is described in these references

Executing the WASD OpenSSL procedure

Alternatively, the files can be generated directly at the command-line using the OpenSSL dhparam utility, as in these examples:

Alternatively, files containing ephemeral keys, generated freshly with each release, may be copied from the WASD OpenSSL package using

When a TLS/SSL connection is initiated an expensive handshake (in terms of time and compute) is required to establish the cryptographic and other elements of the connection. Mitigation of this expense is undertaken by allowing the resumption of a previous session (abbreviating the handshake exchanges) using connection state stored either at the server or at the client.

This TLS extension provides the connection state to the client, encrypted with keys available only to the server. The client stores the (encrypted) state and when (re-)connecting to the server provides that ticket in the initial part of the handshake. The server decrypts the ticket and if valid expedites the connection by resuming the previously negotiated session. This is the more modern, almost universally supported mechanism and is generally enabled by default.

Session tickets introduce a potential vulnerability to TLS security, in particular to the benefits of Forward Secrecy (PFS). If the ticket can be compromised, through theft of the keys or brute-force decryption attack, the entire session becomes vulnerable to attack. It is therefore advised to periodically rotate (change) the keys used by the server to encrypt the tickets. WASD does this every (RFC recommended) 24 hours, at midnight (local time).
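A daily rotation at local midnight amounts to computing the interval until the next midnight and scheduling the key change for then. The following Python sketch shows only that calculation; the function name is hypothetical, it works on naive local datetimes, and it ignores daylight-saving transitions. It is not a description of the server's internal timer.

```python
from datetime import datetime, timedelta

def seconds_until_local_midnight(now):
    """Seconds from 'now' (a naive local datetime) to the next midnight.

    Hypothetical helper; daylight-saving changes are not considered.
    """
    next_midnight = (now + timedelta(days=1)).replace(
        hour=0, minute=0, second=0, microsecond=0)
    return (next_midnight - now).total_seconds()

# One hour before midnight leaves 3600 seconds until rotation.
print(seconds_until_local_midnight(datetime(2021, 11, 1, 23, 0, 0)))
```

A rotation performed exactly at midnight schedules the next one a full 24 hours later.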

Where a site is provided by multiple servers and connections distributed between these, session resumption using tickets relies on each server using the same keys. The current keys must be distributed to each server (using a secure mechanism) and this performed every time the keys are rotated. WASD uses the DLM to perform this for multiple per-node and cluster-wide instances as applicable.

In a full handshake the server sends a Session ID (unique, non-repeating value) as part of the handshake. On a subsequent connection the client can pass this session ID back to the server when connecting. To support session resumption via session IDs the server must maintain a cache that maps past session IDs to those sessions' states. The cache has limited capacity and is expensive for the server to maintain. If the session ID is still available in the cache the session can be resumed. This is the original session resumption mechanism.
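The behaviour of a session ID cache (a bounded map from session IDs to session state, with entries that expire) can be sketched in Python. The class name, the capacity of 1024 entries and the oldest-first eviction policy are assumptions for the illustration; the 300 second lifetime mirrors the five minute default described in this section. This is not the WASD or OpenSSL implementation.

```python
import time
from collections import OrderedDict

class SessionCache:
    """Bounded session ID cache with per-entry expiry (illustrative)."""

    def __init__(self, max_entries=1024, lifetime=300.0, clock=time.monotonic):
        self._entries = OrderedDict()   # session_id -> (expires_at, state)
        self._max = max_entries
        self._lifetime = lifetime
        self._clock = clock

    def put(self, session_id, state):
        """Store session state, evicting the oldest entries when full."""
        self._entries[session_id] = (self._clock() + self._lifetime, state)
        self._entries.move_to_end(session_id)
        while len(self._entries) > self._max:
            self._entries.popitem(last=False)

    def get(self, session_id):
        """Return the state if present and unexpired, otherwise None."""
        entry = self._entries.get(session_id)
        if entry is None:
            return None
        expires_at, state = entry
        if self._clock() >= expires_at:
            del self._entries[session_id]
            return None
        return state

cache = SessionCache(max_entries=2)
cache.put(b"id-1", "state-1")
print(cache.get(b"id-1"))
```

A miss (expired, evicted or never seen) simply means the client falls back to a full handshake, which is why limited capacity affects performance rather than correctness.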

Where a single WASD instance is involved the session cache is implemented in-memory. With multiple instances on a single node it is provided across those instances using a shared global section. The capacity of this shared cache is determined by the WASD_CONFIG_GLOBAL directives [SSLinstanceCacheMax] and [SSLinstanceCacheSize]. There is no cluster-wide session cache. When multiple instances are in use the shared session cache is enabled by default. Session ID caching may be globally disabled by setting [SSLsessionCacheMax] to -1.

With Session Tickets being the more modern, flexible and efficient solution to session resumption (and being available cluster-wide) it is recommended that WASD sites disable Session ID caching.

The default maximum period for session reuse is five minutes. This may be set globally using the [SSLsessionLifetime] directive or on a per-service basis using [ServiceSSLsessionLifetime].

To some extent, the relatively long-lived connections and lower concurrency of HTTP/2 mean that session resumption is less important in improving request latency and connection overhead.

HTTP Strict Transport Security (HSTS) is a security policy mechanism which helps protect sites against protocol downgrade attack and cookie hijacking. It allows web servers to declare that browsers and other complying agents should only interact using secure (TLS) HTTP connections and never via clear-text HTTP. HSTS is an IETF standard specified in RFC 6797.

When global configuration directive [SSLstrictTransSec] is non-zero, or per-service configuration directive [ServiceSSLstrictTransSec] is non-zero, or a path is SET response=sts=<value>, TLS/SSL HTTP responses include a "Strict-Transport-Security: max-age=seconds" header field. Conforming agents note this period and refuse to communicate with the site via clear-text HTTP for the period represented by the integer number of seconds specified.
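The resulting header field can be illustrated with a few lines of Python. The function name and parameters are hypothetical; the sketch only shows that a non-zero period on a TLS/SSL response produces a "Strict-Transport-Security: max-age=seconds" field, and that nothing is added otherwise.

```python
def hsts_header(max_age_seconds, is_tls):
    """Return the HSTS header line, or None when it does not apply.

    Hypothetical helper: the header is produced only for TLS/SSL
    responses and only when the configured period is non-zero.
    """
    if not is_tls or max_age_seconds <= 0:
        return None
    return f"Strict-Transport-Security: max-age={int(max_age_seconds)}"

# One year expressed in seconds, an illustrative value only.
print(hsts_header(31536000, is_tls=True))
```

A conforming agent receiving this field would refuse clear-text HTTP to the site for the stated number of seconds.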

The server certificate is used by the browser to authenticate the server against the Certificate Authority (CA) that issued the certificate, in making a secure connection, and in establishing a trust relationship between the browser and server. By default this is located using the WASD_CONFIG_GLOBAL [SSLcert] or WASD_CONFIG_SERVICE [ServiceSSLcert] configuration directive, the WASD_CONFIG_SSL_CERT logical name, or using the /SSL= command-line qualifier. If required, each SSL service can have an individual certificate configured as in the example above.

The private key is used to validate and enable the server certificate. A private key is enabled using a secret, a password. It is common practice to embed this (encrypted) password within the private key data. This private key can be appended to the server certificate file, or it can be supplied separately. If provided separately it can be located using the WASD_CONFIG_GLOBAL [SSLkey] or WASD_CONFIG_SERVICE [ServiceSSLkey] configuration directive, or using the WASD_CONFIG_SSL_KEY logical. When the password is embedded in the private key information it becomes vulnerable to being stolen as an enabled key. For this reason it is possible to provide the password separately and manually.

If the password is not found with the key during startup the server will request that it be entered at the command-line. This request is made via the HTTPDMON "STATUS:" line (see OPCOM Logging of WASD Configuration), and, if any OPCOM category is enabled, via an operator message. If the private key password is not available with the key it is recommended that OPCOM be configured, enabled and monitored at all times.

When a private key password is requested by the server it is supplied using the /DO=SSL=KEY=PASSWORD directive (9.7 HTTPd Command Line). This must be used at the command line on the same system as the server is executing. The server then prompts for the password.

Multiple virtual SSL services (https:) sharing the same or individual certificates (and other characteristics) can essentially be configured against any host name (unique IP address or host name alias) and/or port in the same way as standard services (http:).

WASD SSL implements Server Name Indication (SNI), an extension to the TLS protocol that indicates what hostname the client is attempting to connect to at the start of the handshaking process. This allows a server to present multiple certificates on the same IP address and port number and hence allows multiple secure (HTTPS) websites (or any other Service over TLS) to be served off the same IP address without requiring all those sites to use the same certificate.

When the client presents an SNI server name during SSL connection establishment, WASD searches the list of services it is offering for an SSL service (the first hit) operating with a name matching the SNI server name. If matched, the SSL context (certificate, etc.) of that service is used to establish the connection. If not matched, the service the TCP/IP connection originally arrived at is used.
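The matching rule just described can be expressed as a short Python sketch. The service records are plain dictionaries with hypothetical keys ("name", "ssl") and the case-insensitive comparison is an assumption; the logic shown is only first-match-wins with a fallback to the service the connection arrived at.

```python
def select_service(services, sni_name, arrived_at):
    """Pick the service whose SSL context should be used.

    Returns the first SSL service whose name matches the SNI server
    name, otherwise the service the connection originally arrived at.
    Records and keys are hypothetical.
    """
    if sni_name:
        wanted = sni_name.lower()
        for service in services:
            if service["ssl"] and service["name"].lower() == wanted:
                return service
    return arrived_at

# Hypothetical service list, for illustration only.
services = [
    {"name": "www.example.com", "ssl": False},
    {"name": "www.example.com", "ssl": True},
    {"name": "shop.example.com", "ssl": True},
]
default = services[1]

print(select_service(services, "shop.example.com", default)["name"])
print(select_service(services, "unknown.example.com", default)["name"])
```

The first lookup selects the matching SSL service, the second falls back to the service the connection arrived at because no name matched.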

When authorization is in place (3. Authentication and Authorization) access to username/password controlled data/functionality benefits enormously from the privacy of an authorization environment inherently secured via the encrypted communications of SSL. In addition there is the possibility of authentication via client X.509 certification (4.5.12 Authorization Using X.509 Certification). SSL may be used as part of the site's access control policy, as whole-of-site, see 3.2 Authentication Policy, or on a per-path basis (see Request Processing Configuration of WASD Configuration).